AI editor interfaces usually fall into one of two buckets: (1) back-and-forth chat, or (2) drag-and-drop canvases. That’s why I wanted to explore a different kind of AI generation interface at the first Gemini Nano Banana hackathon (where we won 4th place!).

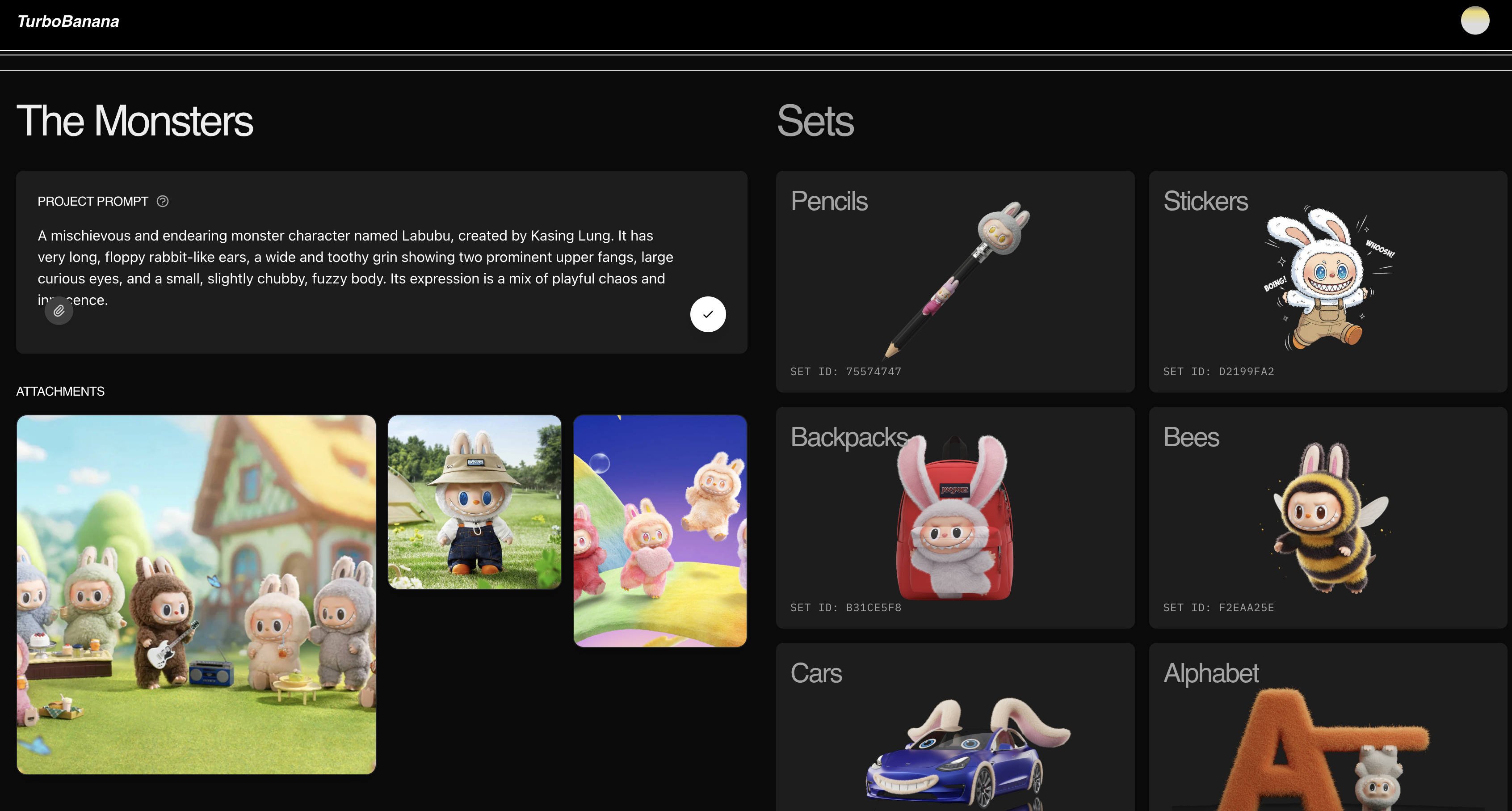

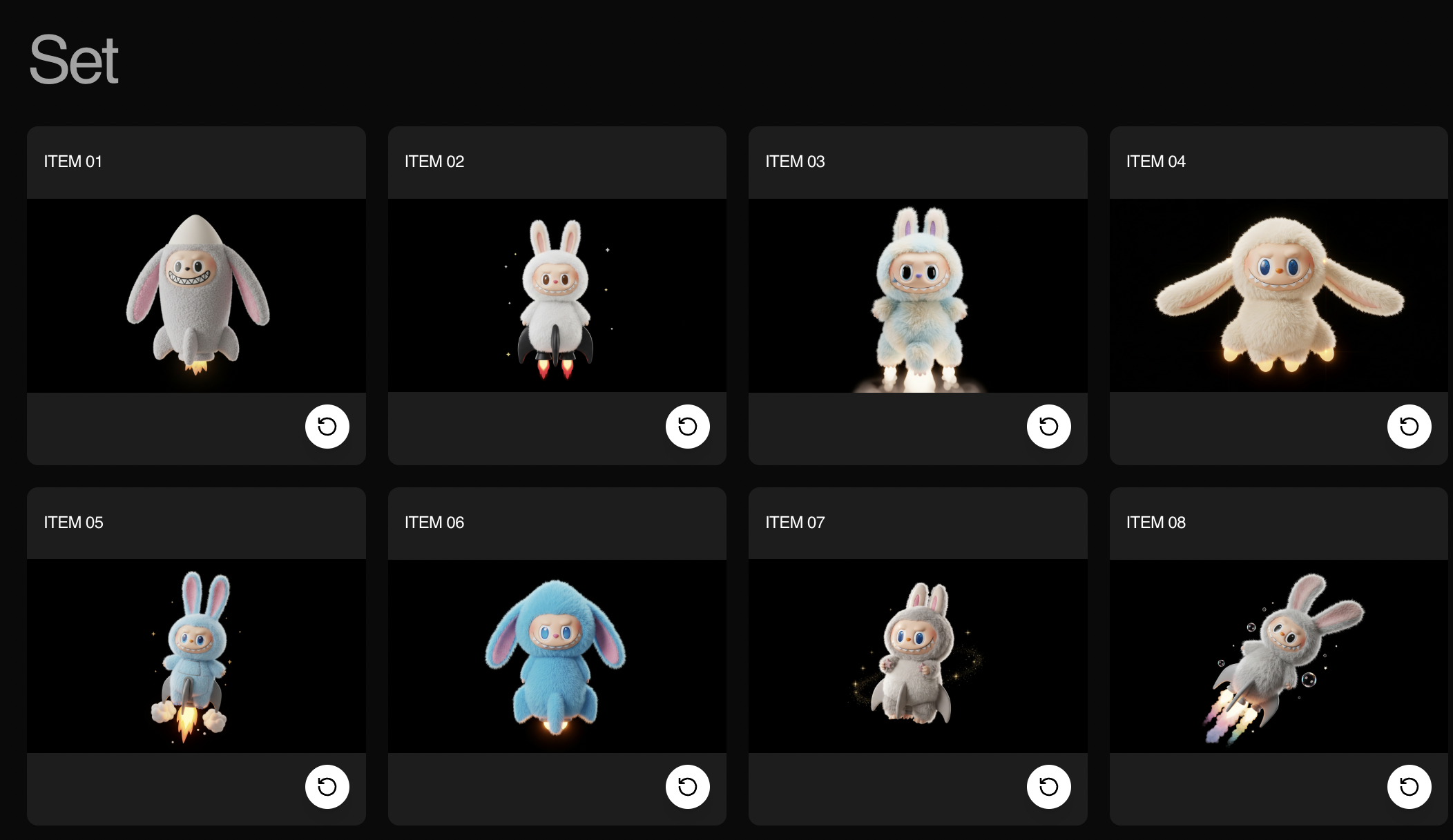

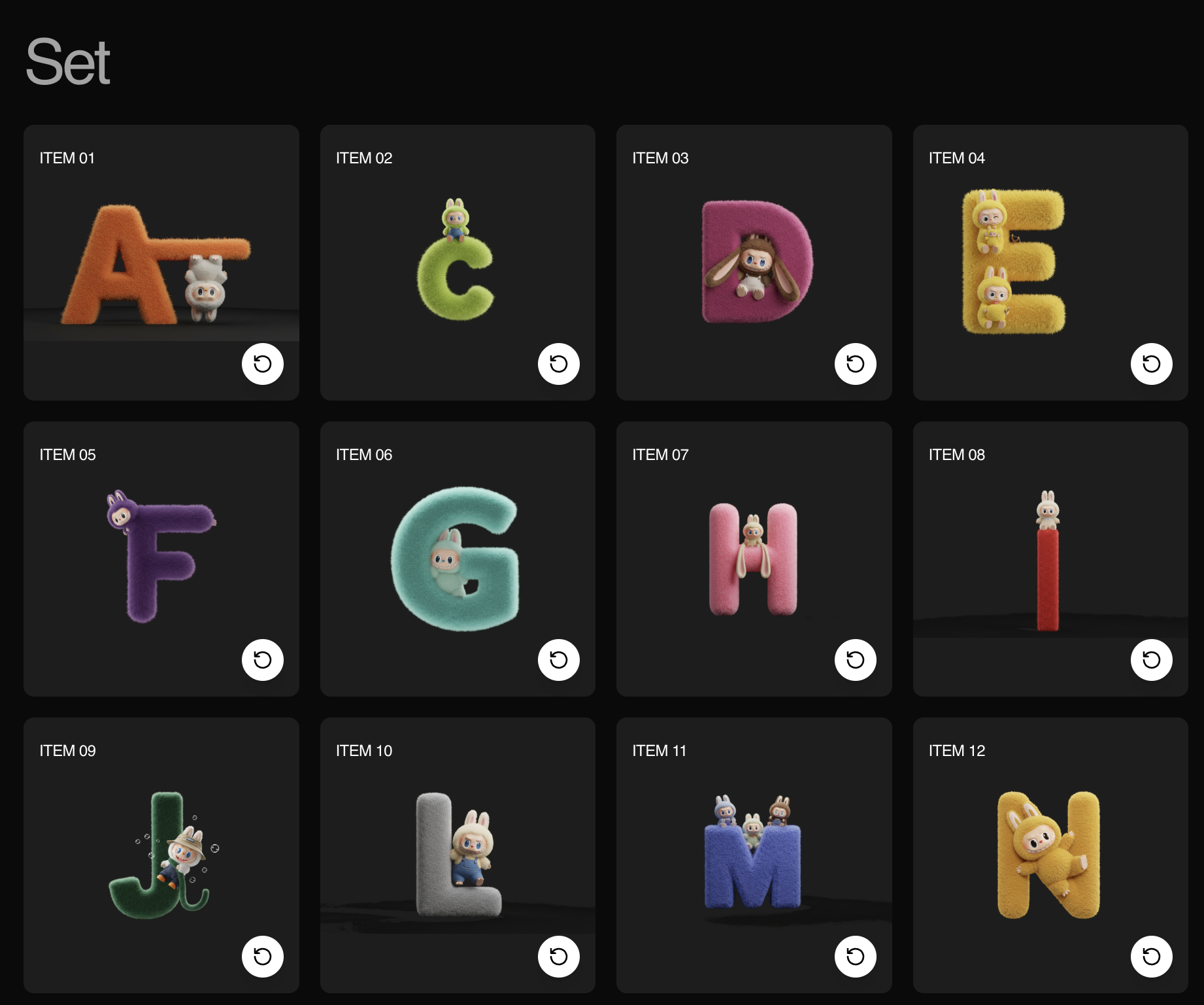

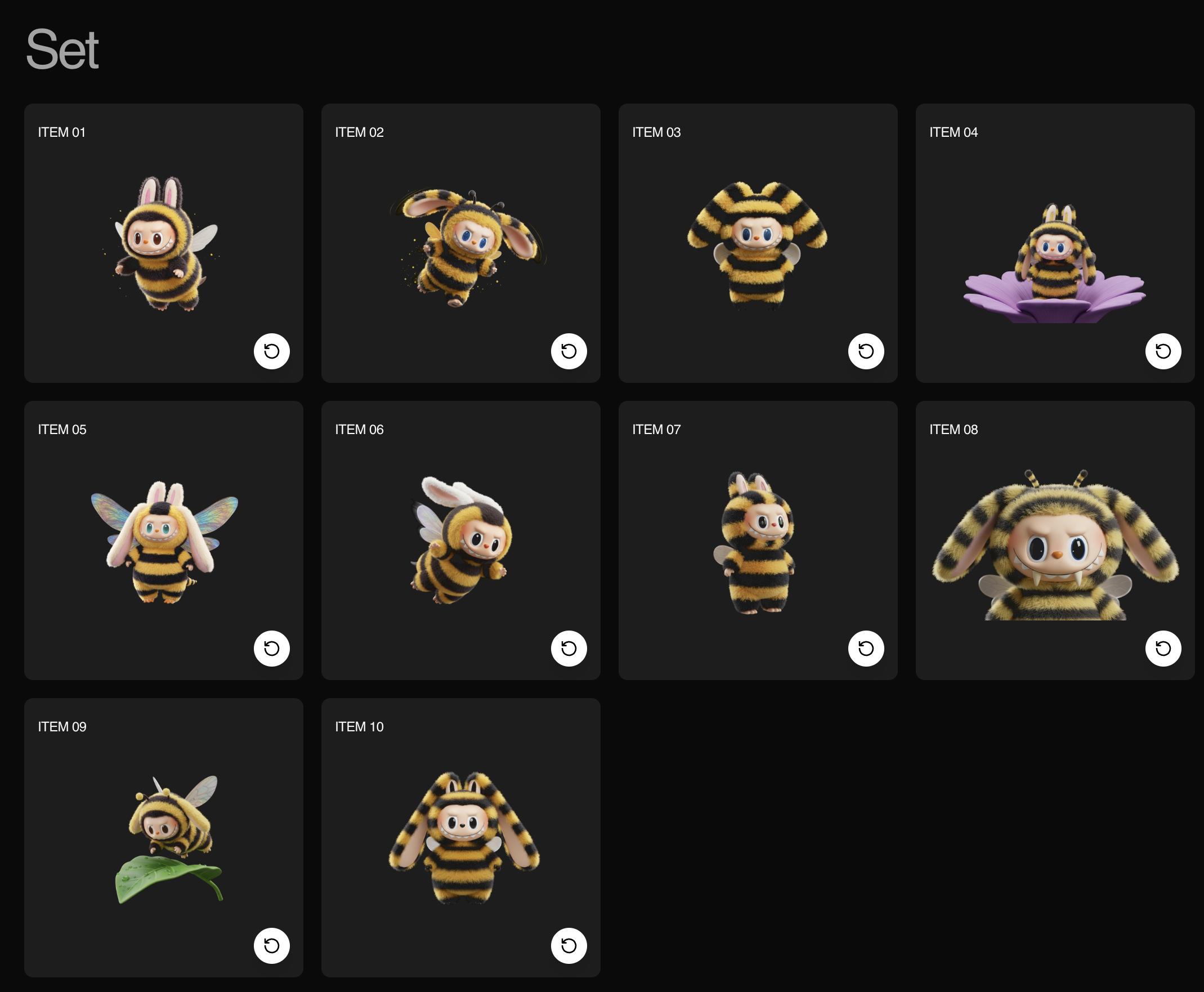

We built a novel consumer app for brands to rapidly experiment and iterate on new assets or product lines. Nano Banana uniquely enables apps & interfaces like this because of its style consistency (both between input & output images and across multiple calls) and generation speed.

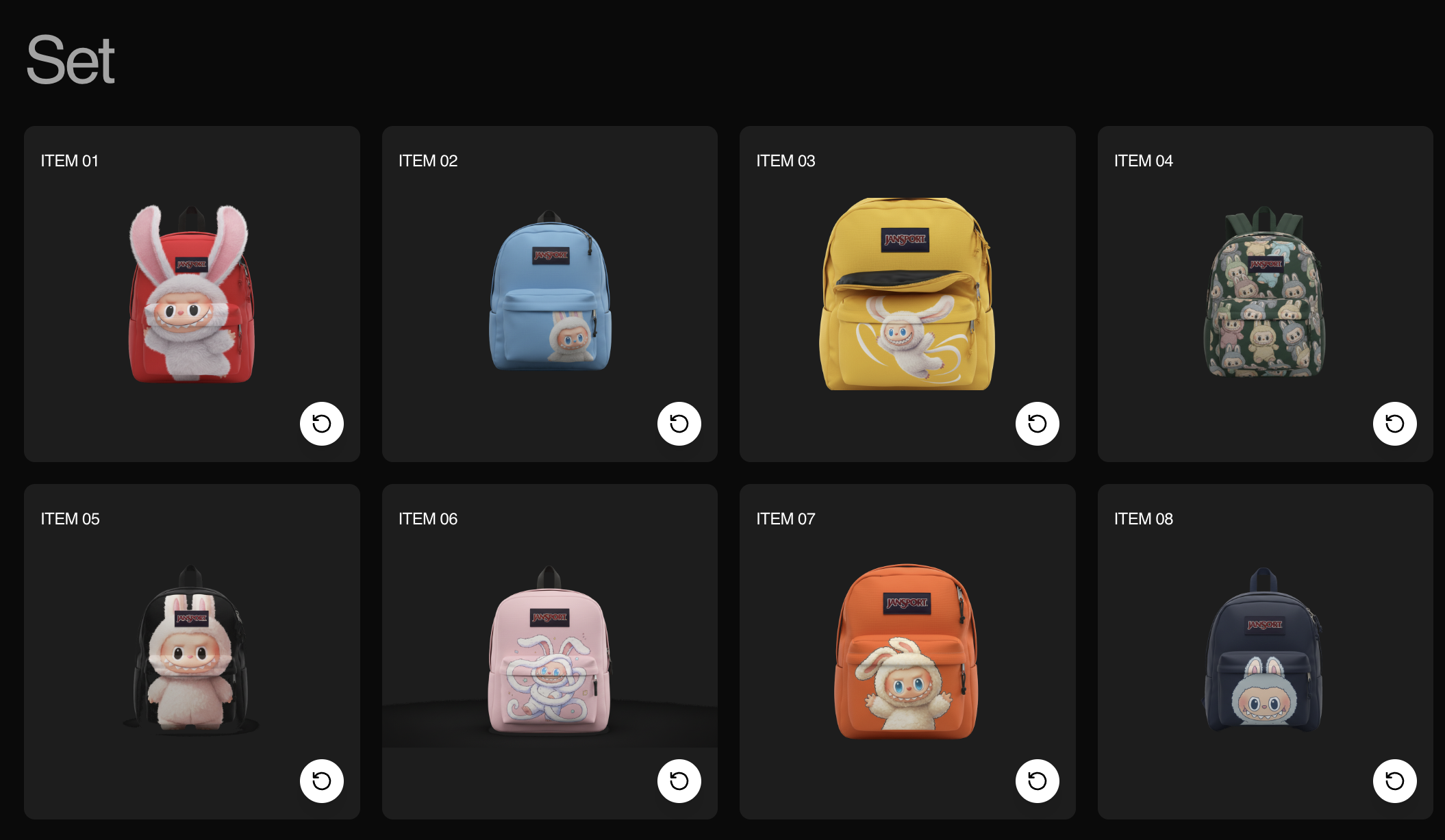

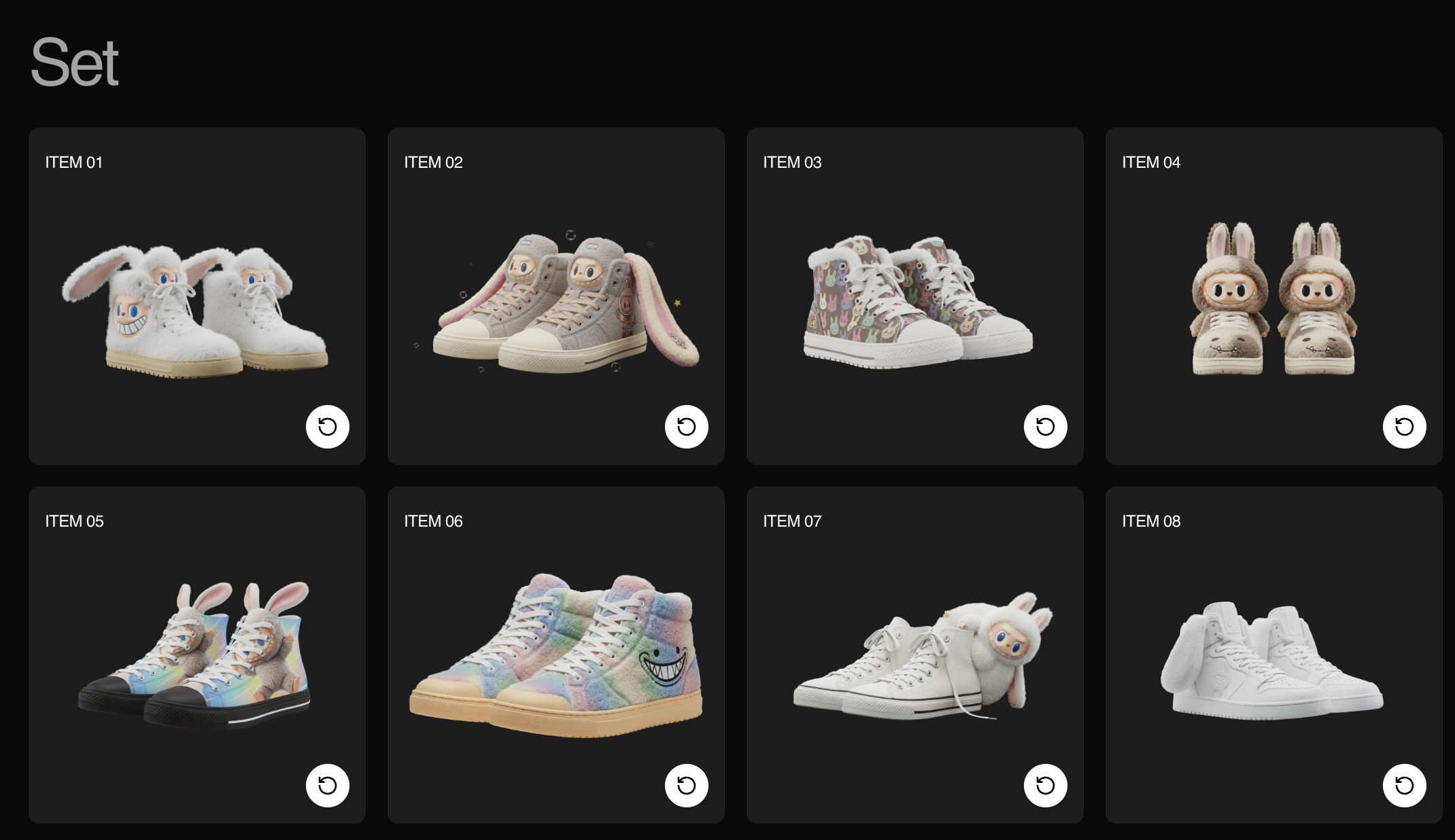

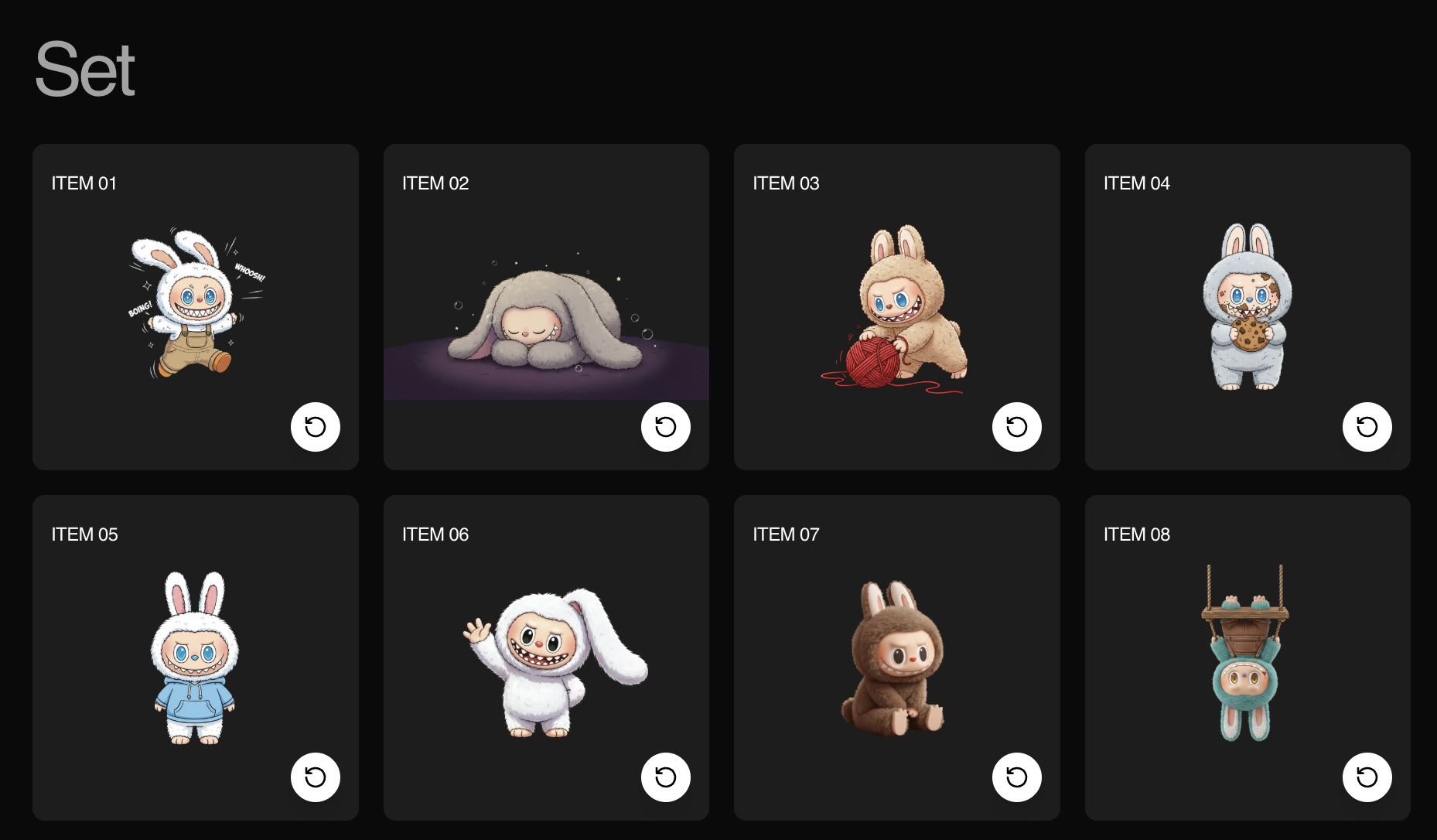

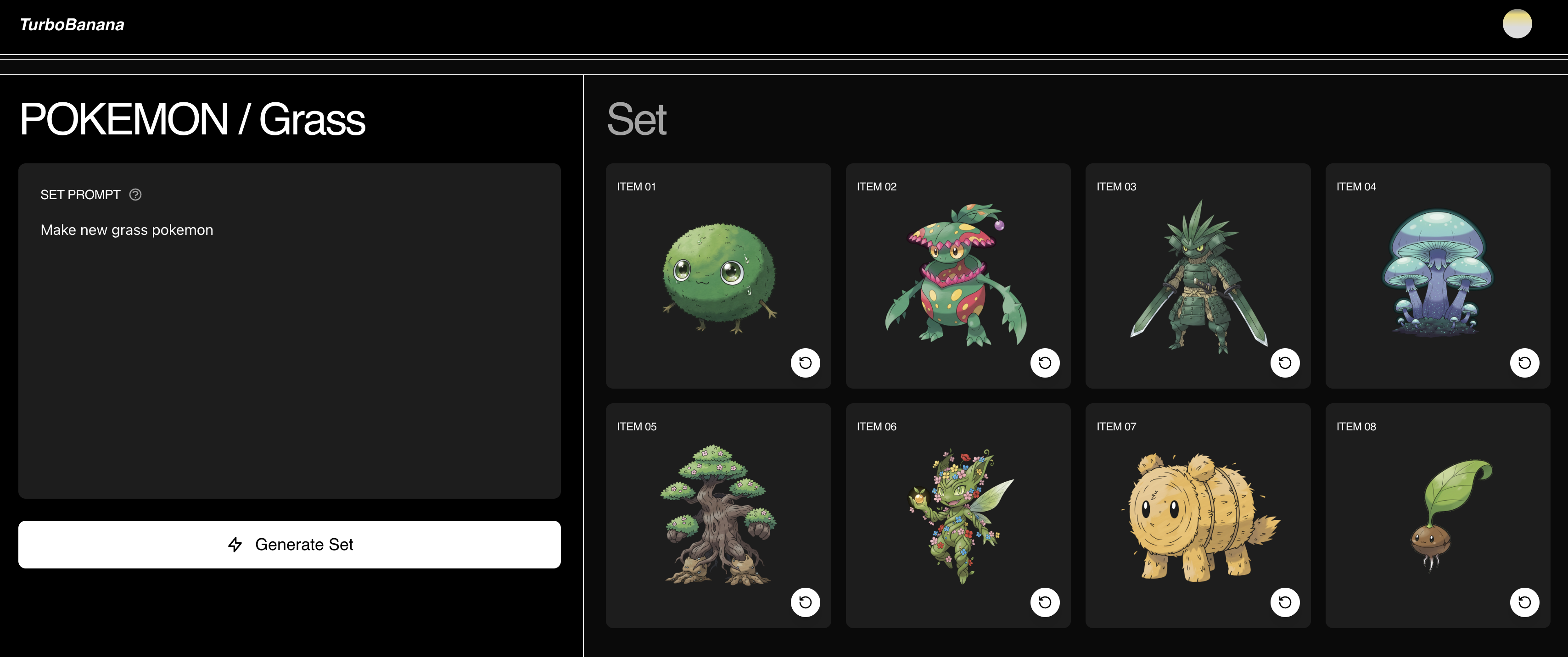

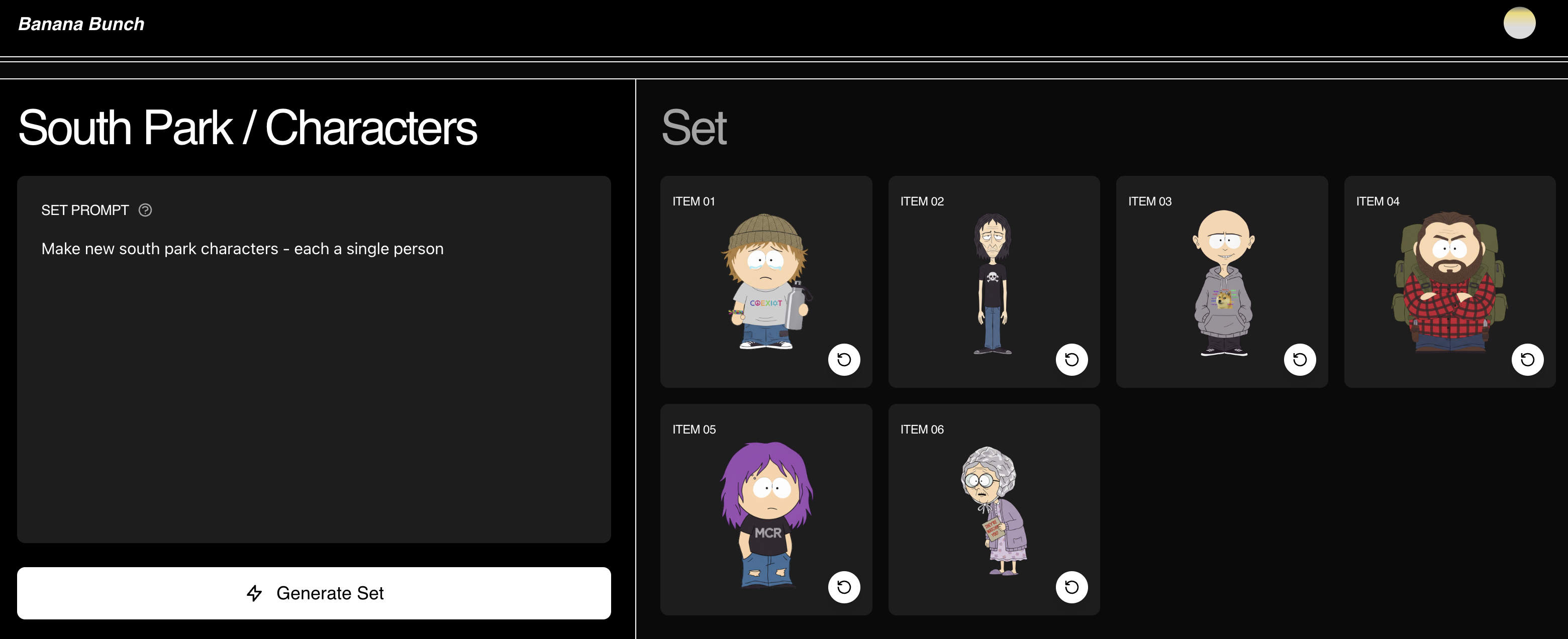

A project is a unified brand or style. The user uploads a few images as the “style guide” for every set within that project. Sets are collections of things that align with that style, generated all at once from a text prompt (e.g. “JanSport backpacks”, “hightop sneakers”, “letters of the alphabet”). When a set is generated, the platform first uses Gemini 2.5 Flash to expand the set's text prompt into a diverse list of prompts. Then, we feed those prompts into Nano Banana along with the style guide, and these image calls run in parallel.
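Here’s a minimal sketch of that fan-out in Python. It is not the code from our hackathon project: `expand_prompts` and `generate_image` are hypothetical stand-ins for the Gemini 2.5 Flash and Nano Banana calls, and they return fake data so the example runs without API keys. The part it illustrates is the shape of the pipeline: one prompt-expansion step, then one image call per prompt, all sharing the same style guide and awaited together.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class GeneratedAsset:
    prompt: str
    image: bytes


async def expand_prompts(set_description: str, n: int) -> list[str]:
    """Hypothetical stand-in for the text-model call that diversifies one set prompt."""
    await asyncio.sleep(0)  # placeholder for the real network call
    return [f"{set_description}, variation {i + 1}" for i in range(n)]


async def generate_image(prompt: str, style_guide: list[bytes]) -> GeneratedAsset:
    """Hypothetical stand-in for one image-model call conditioned on the style guide."""
    await asyncio.sleep(0)  # placeholder for the real network call
    fake_image = f"{len(style_guide)} style refs | {prompt}".encode()
    return GeneratedAsset(prompt=prompt, image=fake_image)


async def generate_set(
    set_description: str, style_guide: list[bytes], n: int = 4
) -> list[GeneratedAsset]:
    # Step 1: turn one set prompt into n varied prompts.
    prompts = await expand_prompts(set_description, n)
    # Step 2: fan out one image call per prompt, all using the same style guide.
    # gather() runs them concurrently and returns results in prompt order.
    return await asyncio.gather(
        *(generate_image(p, style_guide) for p in prompts)
    )


if __name__ == "__main__":
    style_guide = [b"style-ref-1", b"style-ref-2"]
    assets = asyncio.run(generate_set("hightop sneakers", style_guide, n=3))
    for asset in assets:
        print(asset.prompt)
```

Because every image call only depends on its own prompt and the shared style guide, the total wait is roughly the slowest single generation rather than the sum of all of them, which is what makes a gallery of outputs feel fast.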

The [one style –> one product –> N generated variations] pipeline is great for experimentation or creating a lot of background assets within a particular “universe” (aka brand).

Labubus are an amazing example of a brand that is taking the world by storm and should continue to try to occupy as much mindshare as possible to increase their bottom line. Our platform makes it really easy to launch one-time campaigns (e.g. backpack partnerships for back-to-school season) and visually experiment with new products to gauge initial consumer interest without investing too many internal company resources (e.g. design, manufacturing, etc.).

LABUBU-IFY EVERYTHING MWAHAHA!!

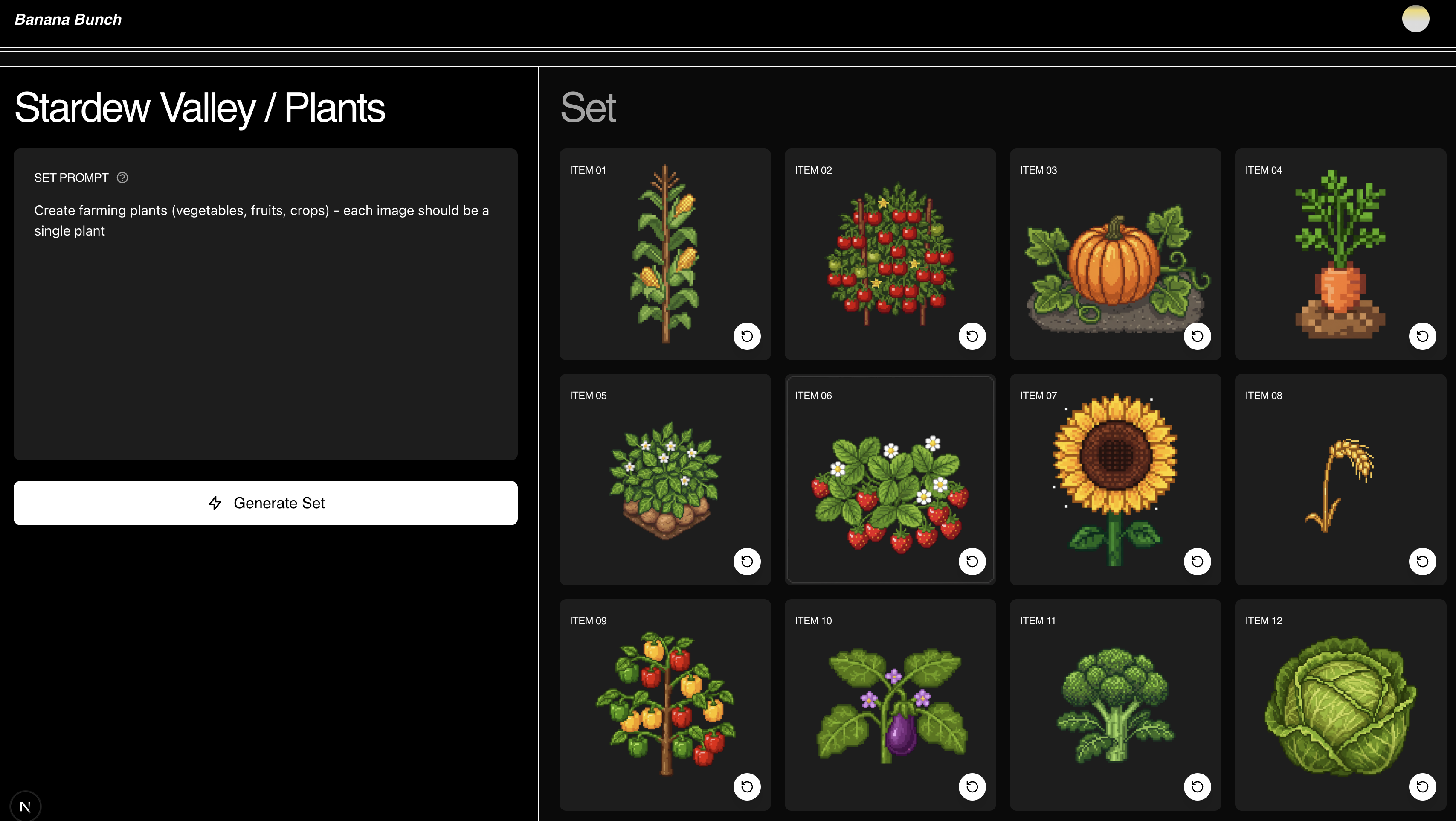

If you’re an indie 2D game developer and are bottlenecked by art (e.g. creating pixel art assets), you can generate a bunch of sets that all match the style of your game universe. For example, for Stardew Valley, I made sets of plants, animals, and houses:

If you’re a creative and need inspiration for character design or need a bunch of “NPCs”, you might generate different sets of characters, like new grass Pokémon or background characters for South Park.


One thing I really disliked about Google AI Studio is how slow the turn-taking chat interface is. Chat is a great pattern when you are iterating on one thing, but a horrible one for iterating on many things under one theme. It’s slow or outright impossible to parallelize work, it’s annoying to repeat prompts and image attachments for each generation, it’s difficult to separate style from content, and there’s no gallery view for seeing all outputs at once.

Even Sora and Midjourney haven’t nailed the best flow in my opinion, but I think they are still very good consumer interfaces that have the right pipeline for what they are trying to achieve right now (queues for background generation, social explore gallery, etc.).

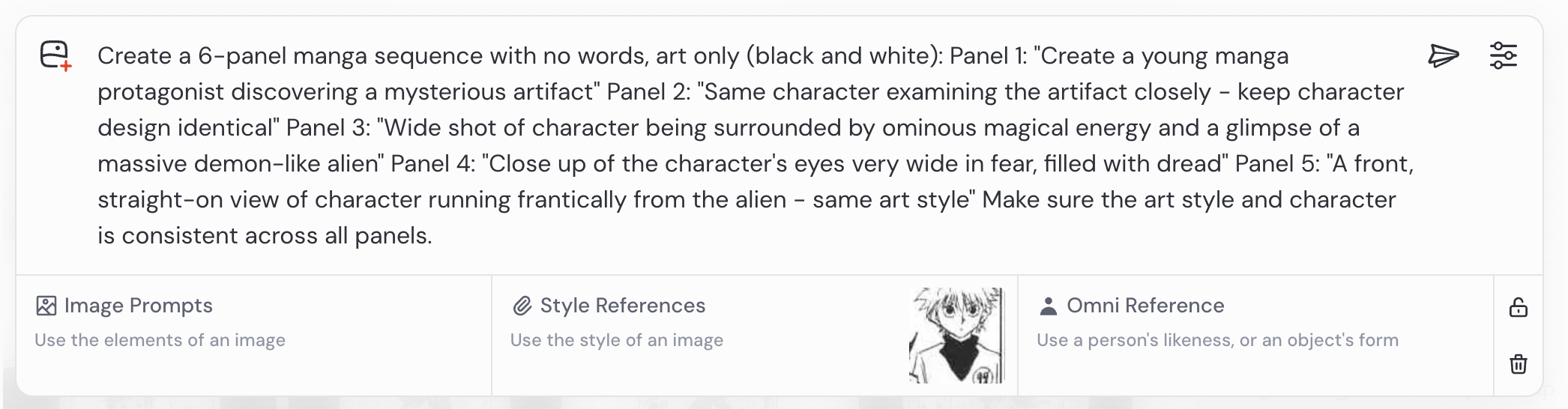

As mentioned in an earlier post, I really like Midjourney’s separation of image, style, and omni in their prompt editor. It makes it easier to specify what components the image model should focus on for what purpose – which sets user expectations and guides the model better for more reliable outputs:

Lots of these AI image editors are still in the “toy” stage, but we’re entering an era where the human-AI interface will determine winners and losers. As models commoditize, the interface becomes the differentiator. Building at the Nano Banana hackathon made me think about:

- Parallel creativity: Most AI tools assume single-threaded thinking, but creativity is about exploring multiple directions simultaneously. We need interfaces for creative worlds, not just individual outputs.

- Granular intent: Interfaces should do the bulk of the reasoning about inputs at the user input layer. Midjourney’s style/image/omni separation gives users a clearer mental model and gives models better input guidance. These interfaces will probably vary depending on the use case (e.g. making a comic book might have a character input stage, a plot stage, etc.).

- Sketching speed: When generation is fast enough, AI becomes a co-collaborator in brainstorming rather than a service you commission. This gets ideas off the ground and lets you iterate faster.

Here’s the GitHub repo for our hackathon project!